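A Spark program prints a large amount of log output to the console after it starts. I went through many tutorials without fixing it, and for a long time I simply put up with it. Once I started learning Spark Streaming, though, it became unbearable: the logs seriously got in the way of reading the computation results. So I finally decided to solve this long-standing annoyance. If method 1 (the usual fix) does not work for you, skip straight to step 3 (the final fix); step 2 only deals with the logging dependency conflict.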

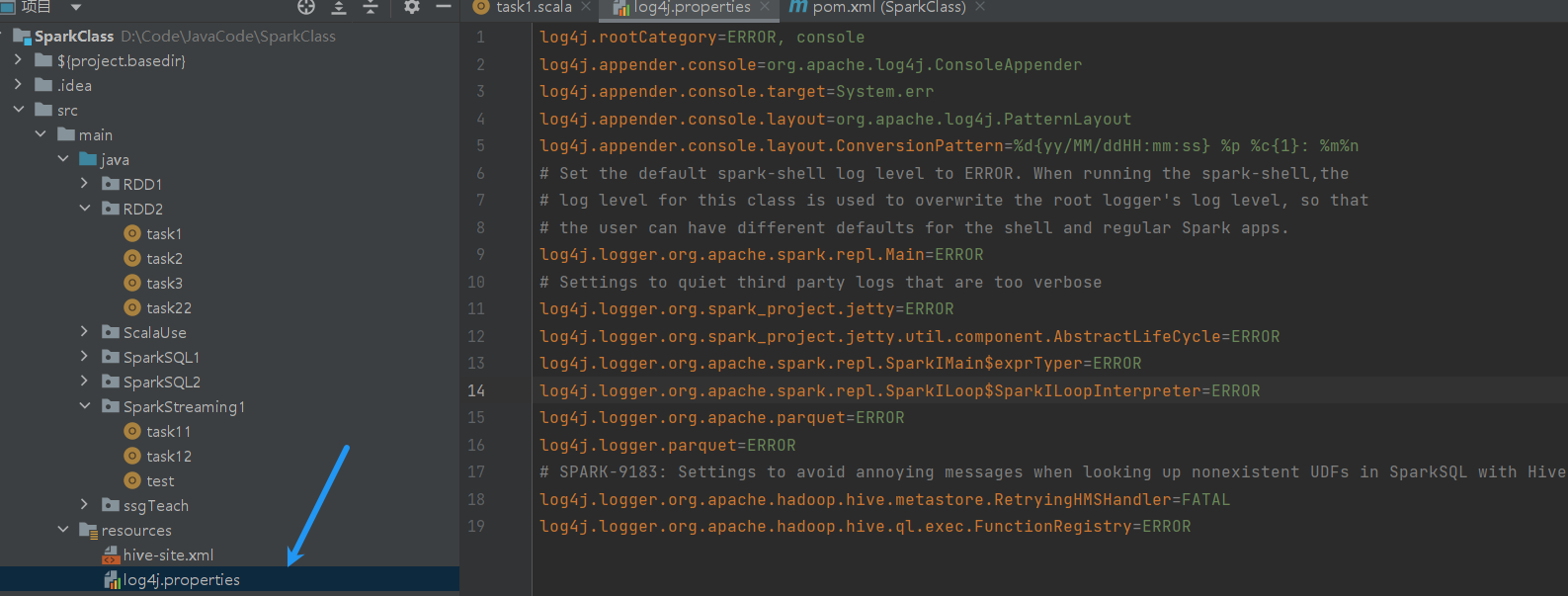

The usual fix

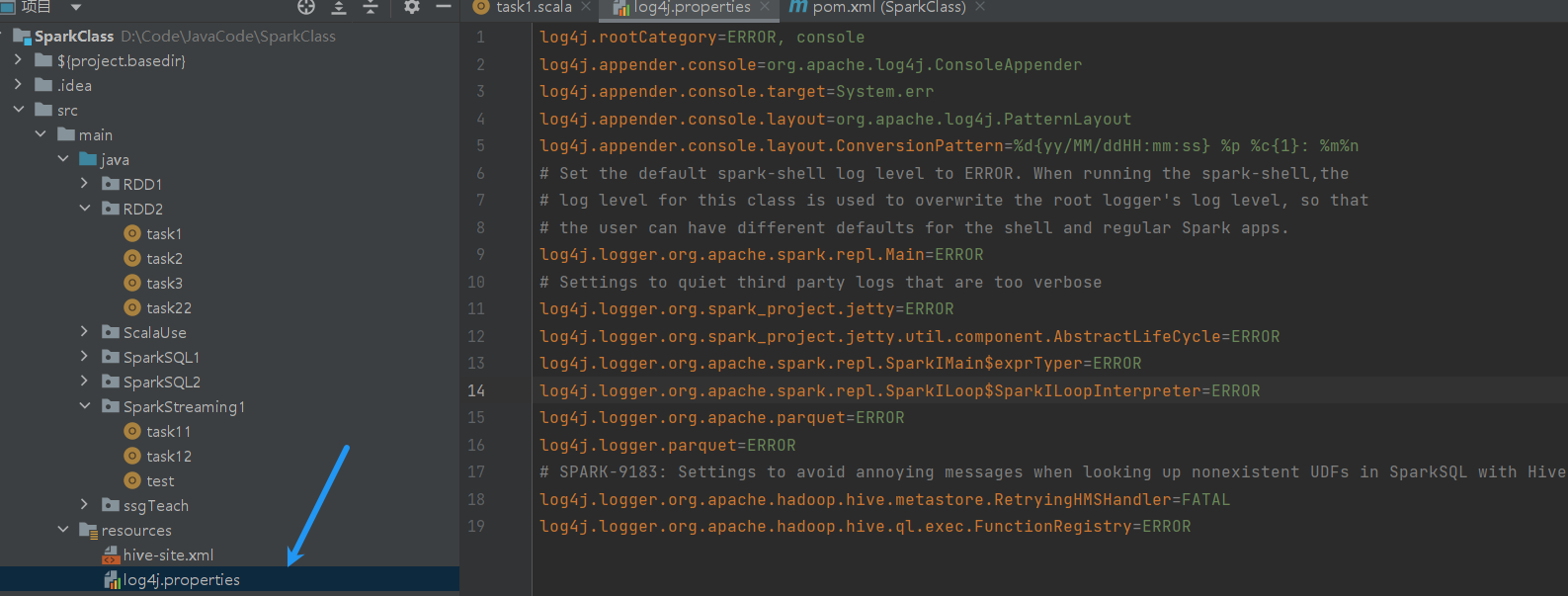

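Create a `log4j.properties` file under the `resources` directory (`src/main/resources` in a standard Maven project) with the following content: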

    log4j.rootCategory=ERROR, console
    log4j.appender.console=org.apache.log4j.ConsoleAppender
    log4j.appender.console.target=System.err
    log4j.appender.console.layout=org.apache.log4j.PatternLayout
    log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n

    # Set the default spark-shell log level to ERROR. When running the spark-shell, the
    # log level for this class is used to overwrite the root logger's log level, so that
    # the user can have different defaults for the shell and regular Spark apps.
    log4j.logger.org.apache.spark.repl.Main=ERROR

    # Settings to quiet third party logs that are too verbose
    log4j.logger.org.spark_project.jetty=ERROR
    log4j.logger.org.spark_project.jetty.util.component.AbstractLifeCycle=ERROR
    log4j.logger.org.apache.spark.repl.SparkIMain$exprTyper=ERROR
    log4j.logger.org.apache.spark.repl.SparkILoop$SparkILoopInterpreter=ERROR
    log4j.logger.org.apache.parquet=ERROR
    log4j.logger.parquet=ERROR

    # SPARK-9183: Settings to avoid annoying messages when looking up nonexistent UDFs in SparkSQL with Hive support
    log4j.logger.org.apache.hadoop.hive.metastore.RetryingHMSHandler=FATAL
    log4j.logger.org.apache.hadoop.hive.ql.exec.FunctionRegistry=ERROR

Reading the log for clues

The usual fix did not work, so I read the log output for clues. It clearly reports a logging dependency conflict:

    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/D:/maven-3.8.6/respository/org/apache/logging/log4j/log4j-slf4j-impl/2.17.2/log4j-slf4j-impl-2.17.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/D:/maven-3.8.6/respository/org/slf4j/slf4j-reload4j/1.7.36/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

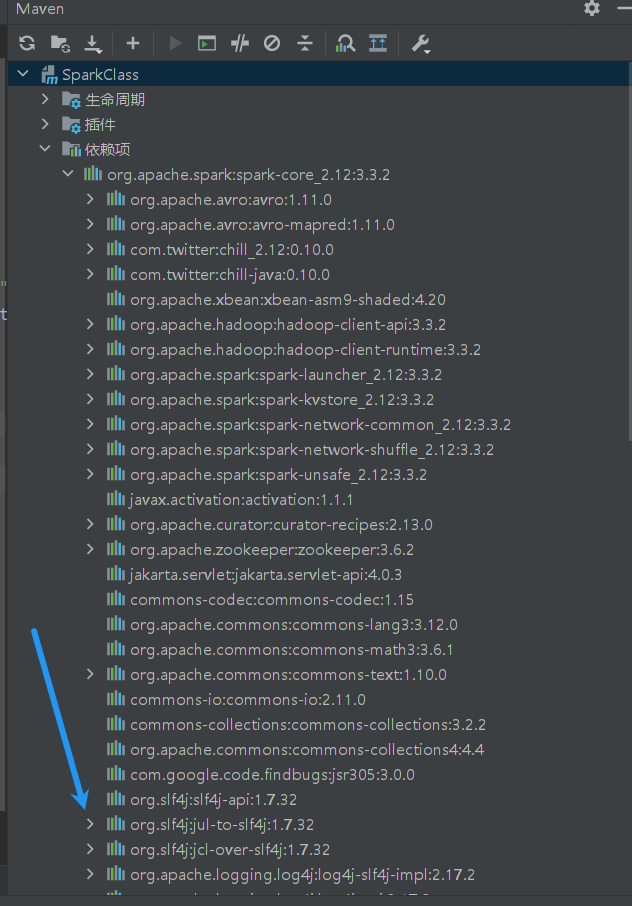

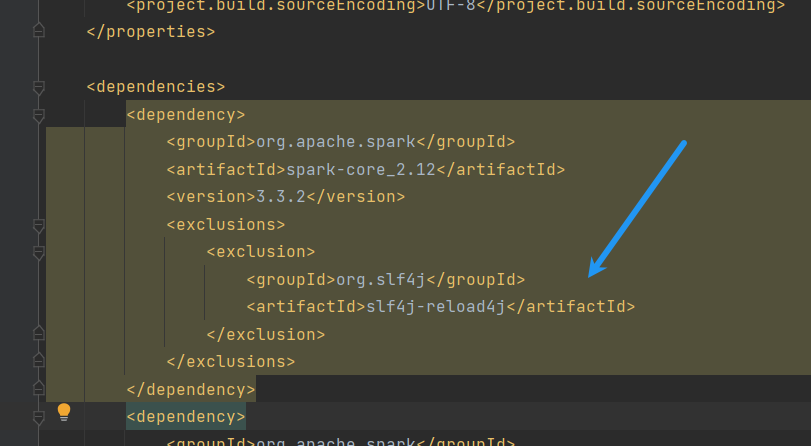

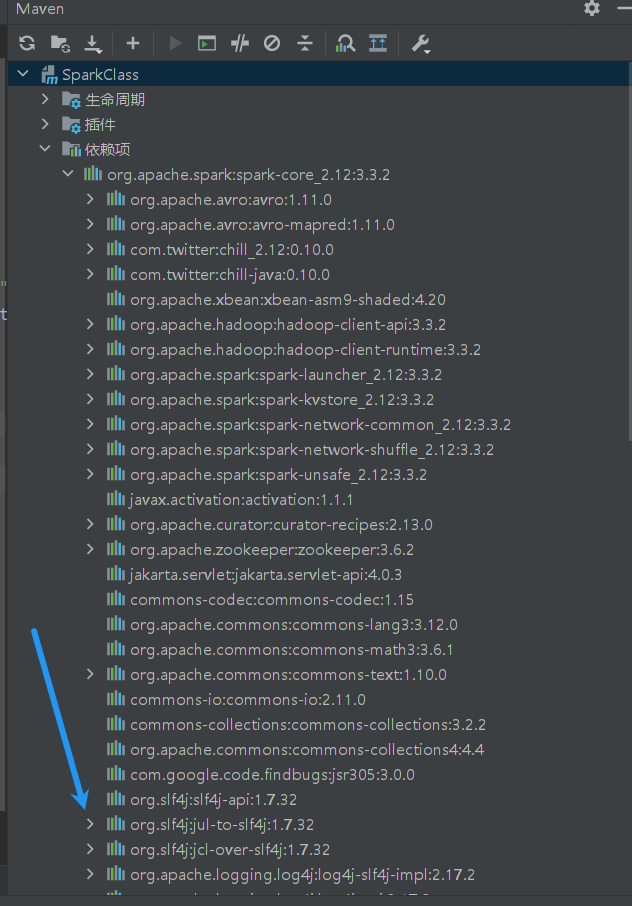

I suspected that the binding conflict was the cause (it turned out not to be), so I analyzed the logging dependencies.

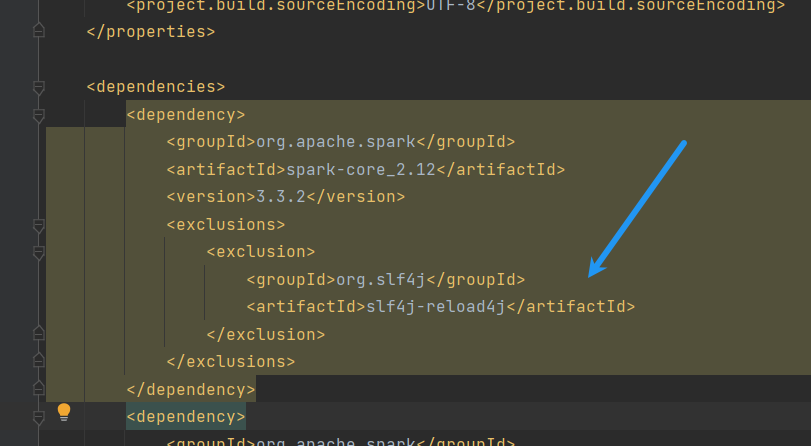

I found an slf4j dependency under spark-core, so I added the following inside the spark-core `dependency` element to exclude the logging package:

    <exclusions>
        <exclusion>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-reload4j</artifactId>
        </exclusion>
    </exclusions>
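For context, here is a minimal sketch of where the `<exclusions>` element sits inside a full dependency declaration. The artifact ID `spark-core_2.12` and the version `3.3.0` are assumptions for illustration and are not taken from the original project; substitute whatever your own `pom.xml` declares.

```xml
<dependency>
  <groupId>org.apache.spark</groupId>
  <!-- Assumed coordinates: use the Scala suffix and version from your own project -->
  <artifactId>spark-core_2.12</artifactId>
  <version>3.3.0</version>
  <exclusions>
    <!-- Exclude the reload4j binding from this dependency's transitive tree -->
    <exclusion>
      <groupId>org.slf4j</groupId>
      <artifactId>slf4j-reload4j</artifactId>
    </exclusion>
  </exclusions>
</dependency>
```

An exclusion only affects the dependency it is declared on, so any other dependency that also pulls in `slf4j-reload4j` would need the same block.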

The conflict was still reported, so I added the same exclusion to every dependency.

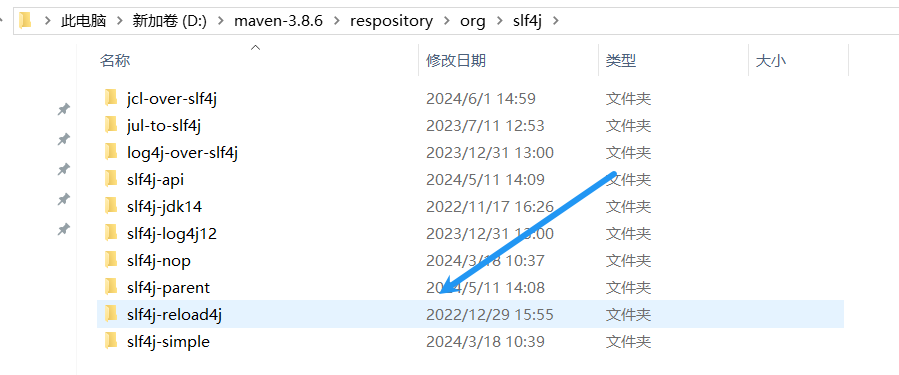

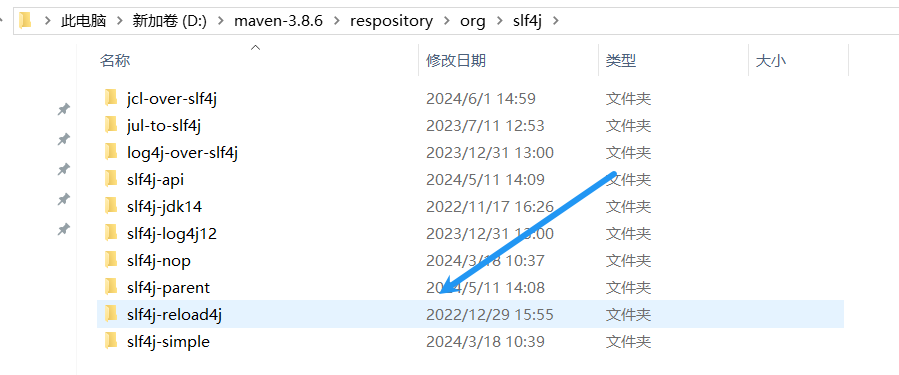

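That still had no effect. The log shows that the conflict comes from reload4j, so I simply deleted reload4j from the local Maven repository, which got rid of the binding conflict.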

However, even with the dependency conflict resolved, the flood of INFO logs was still there.

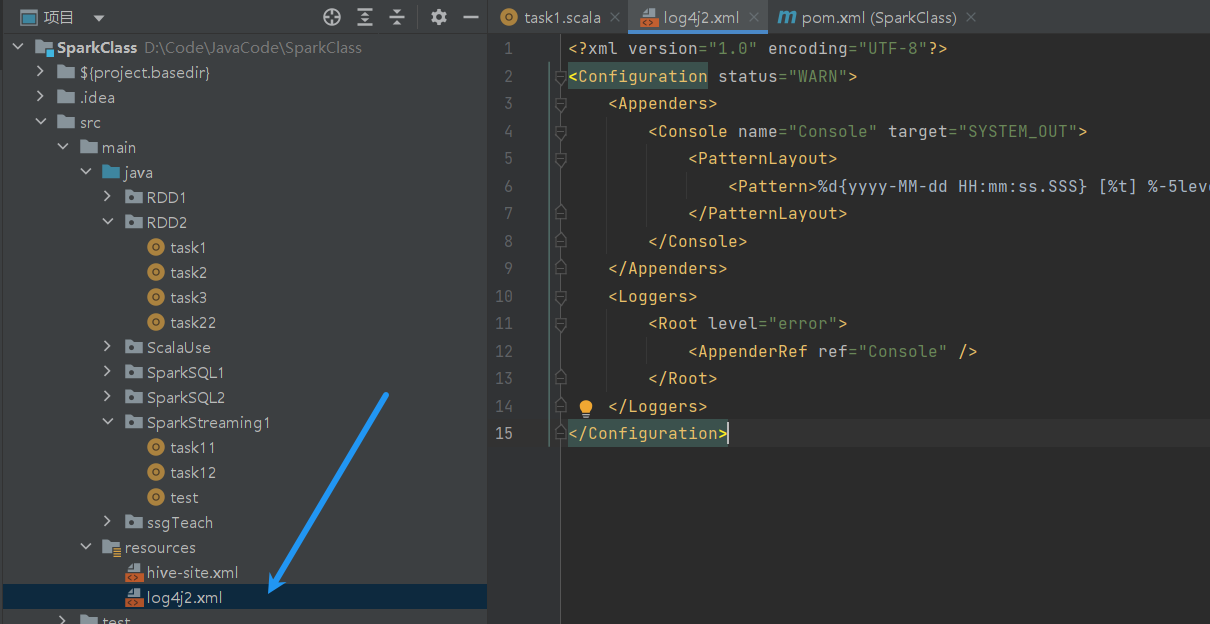

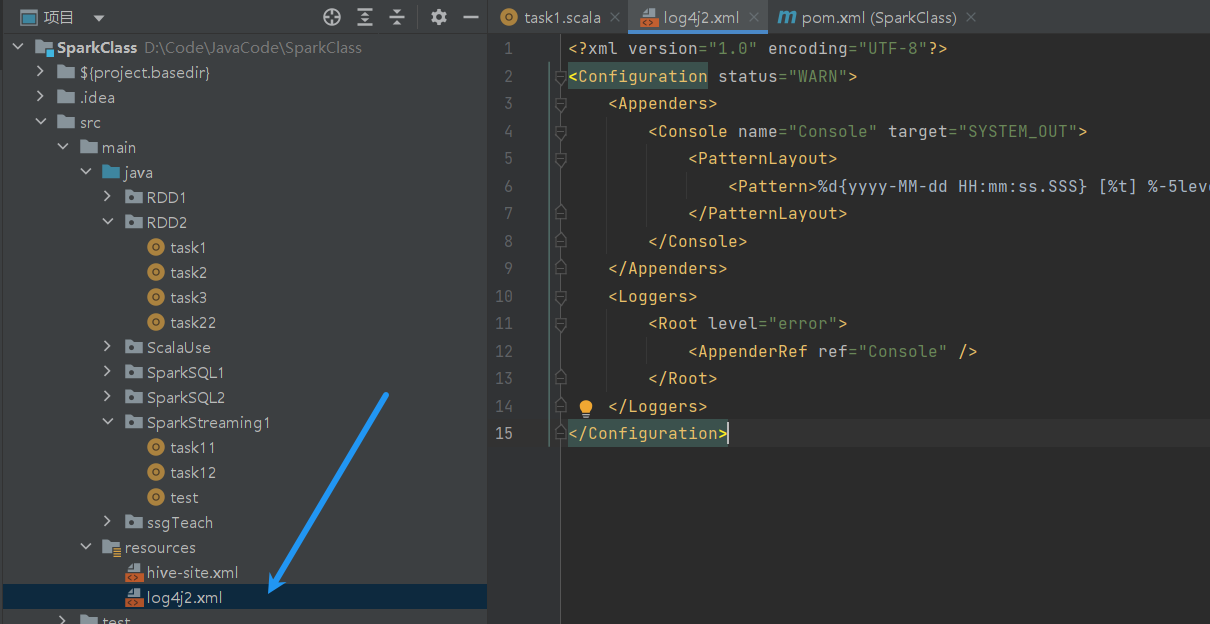

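The final fix

Create a `log4j2.xml` file under the `resources` directory with the content below, and the problem is solved. The SLF4J output shown earlier reports that the actual binding is Log4j 2 (`org.apache.logging.slf4j.Log4jLoggerFactory`), and Log4j 2 does not read a Log4j 1.x `log4j.properties` file by default, which is most likely why the usual fix had no effect.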

    <?xml version="1.0" encoding="UTF-8"?>
    <Configuration status="WARN">
        <Appenders>
            <Console name="Console" target="SYSTEM_OUT">
                <PatternLayout>
                    <Pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n</Pattern>
                </PatternLayout>
            </Console>
        </Appenders>
        <Loggers>
            <Root level="error">
                <AppenderRef ref="Console" />
            </Root>
        </Loggers>
    </Configuration>
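If silencing everything below ERROR is too coarse, a variant of the same file can quiet only the framework loggers. The following is a sketch, assuming the noisy output comes from loggers under `org.apache.spark` and `org.apache.hadoop`; the `info` level on the root logger is an illustrative choice and not part of the original fix.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="WARN">
  <Appenders>
    <Console name="Console" target="SYSTEM_OUT">
      <PatternLayout pattern="%d{yyyy-MM-dd HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n"/>
    </Console>
  </Appenders>
  <Loggers>
    <!-- Quiet Spark and Hadoop internals; they inherit the Console appender from Root -->
    <Logger name="org.apache.spark" level="error"/>
    <Logger name="org.apache.hadoop" level="error"/>
    <!-- Assumed level for everything else, including your own classes -->
    <Root level="info">
      <AppenderRef ref="Console"/>
    </Root>
  </Loggers>
</Configuration>
```

Other libraries on the classpath may still log at INFO under this configuration; each would need its own `<Logger>` entry, or the root level can be raised back to `error` as in the original file.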
